An argument as to why chess engines should only be supplementary to human analysis

You'll have heard this advice before. You should analyse your games yourself first, or with your coach (if you have one) or your peers, before subjecting them to the verdict of Stockfish. The reason is that such analysis sharpens your skills, especially when done with a stronger player. Stronger player? Humans can't beat engines. Not even Magnus Carlsen. So why delay asking the engine, the ultimate stronger player? The best reason is that human beings think and understand in general terms and concepts rather than in concrete variations. And if you can't put into words what went wrong in a game you lost beyond 'I missed something', then you've little prospect of learning from your mistakes.

That's not to say there isn't plenty to be gained from analysing our games with an engine after we've put the effort into doing it for ourselves. However, we must be constantly alert to the reality that the likes of Stockfish are chess engines, not truth engines. They're not infinite minds. Yes, computer chess may now be superior to human chess in all but an aesthetic sense, but humans don't play computer chess. And what is produced by engines is not necessarily useful for human players.

A chess engine is a tool. A tool is only as good as its user

In a recent Chessbase article entitled 'Typical mistakes when using an engine', Jan Markos explores the issue of errors made when relying on computer assistance. Those mistakes are:

Underpinning both observations is a concession that engines seldom get things wrong. That's not the problem - engines are always right on engine terms. The problem, rather, is that the engine's evaluations, taken at face value, allow human operators to mislead themselves. Here is a selection of examples demonstrating the potential difficulties in the human-machine relationship.

(The following games are all analysed by the Stockfish 14 feature built into the playing site lichess.org. Note: those wanting an explanation of why engines make the choices they do - how they actually work - will have to go elsewhere. Such things are well outside my realm of expertise. However, readers not aware of the fundamental issues could do worse than to start here: https://en.m.wikipedia.org/wiki/Horizon_effect.)

Engines are counting machines. Sometimes they just count the pieces

The engine calculates and then assesses and reassesses move by move, whereas the human player finds permanent features of a position to hold on to. An example:

White is a pawn up, but black's position is a fortress and the game a draw. The black king and bishop can keep white's king locked out of the black position across the board. Provided the black bishop keeps the white king out of e5, the white king cannot infiltrate the kingside. Provided the black king gets to d7, from where it can reach b7, the white king cannot escort the b-pawn to the queening square (play it out for yourself). Nevertheless, the engine, at depth 33/33, evaluates it at +0.7, an evaluation that, taken at face value, would give the casual user false hope that white has winning chances. The human player should recognise the position as a draw despite black's material deficit, and the human analyst might discover it's a draw simply by playing it out. But because white has an extra pawn, the engine declares a white advantage.

Similar engine evaluations do not have the same weight

Contrast the +0.7 in our previous example with this position:

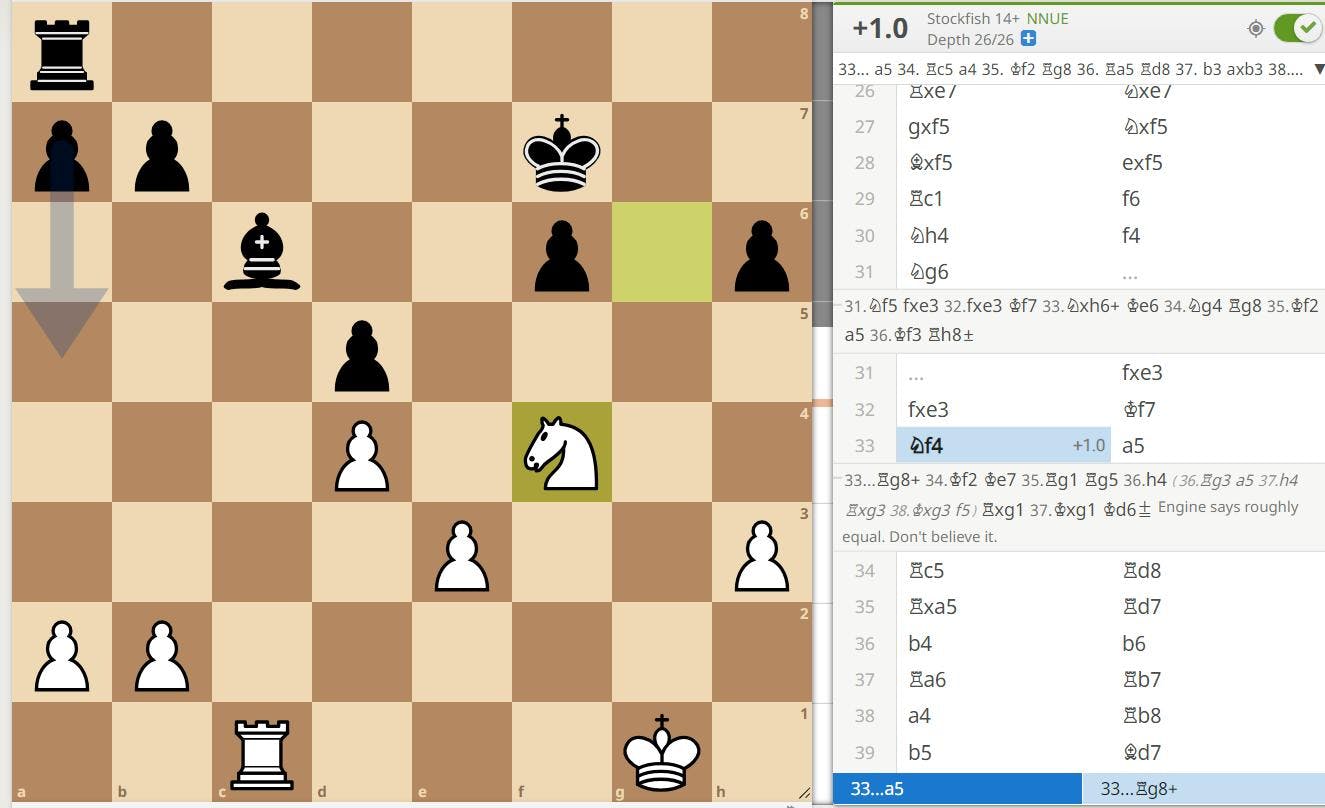

Here an evaluation of +1.0 at depth 26/26 belies the truth that black's position is horrendous. He's facing a classic good knight vs. bad bishop scenario in which he could be tortured all night long. All the bishop can do is defend the d5 pawn, whereas the knight is free both to manoeuvre and to attack pawns.

Black's position is so bad that the engine suggests what a GM almost certainly never would: 33...a5, giving himself two weaknesses on a5 and d5. (Two weaknesses are bad because while you can defend one weakness, defending two stretches your position to breaking point.) This speaks to Markos's point about engines not favouring natural human moves.

The engine can pronounce equality despite one side having no play

Here Karpov offered an ingenious exchange sacrifice against Gelfand at Linares in 1993:

After 20. Rd5

With lichess's time-consuming 'go deeper' analysis option switched on, the engine calls it half a pawn better for white. Black will win the exchange, but his rooks will be tied down to stopping the d-pawn from advancing. His dark-squared bishop has nothing to do, and his broken kingside pawns, which once offered the rooks a half-open g-file, are now nothing but a liability. He has no obvious plan to solve these problems.

White, on the other hand, by virtue of the resulting pawn on d5, and by moving the rook to d1 and the bishop to c4, will achieve an iron grip on the light squares in the middle of the board. He can then manoeuvre his knight at leisure.

Should black ever give back the exchange in order to break white's light-square control, he risks falling into a good knight vs. bad bishop scenario like the one in our previous example. In short, black, upon taking the rook (and it's not clear what else he can do, as the white knight will be gumming up the whole position), will have a material advantage but nothing else.

Let's wind the game on a bit and forgo the time- and CPU-consuming 'go deeper' option:

Four moves have passed. Along the way, potentially deceptive fluctuations in the engine evaluation have occurred, and yet all that has really happened is that our prediction for the position has been realised: Black has captured the rook and, as a consequence, white has a stranglehold on the light squares. Yet the engine has changed its mind - the position is now equal! Observe, too, the line the engine gives in the screen capture above.

No strong human player who recognises that the strength of their position lies in the light-square hold on the centre is going to play the line 25. Qh4 Bg5 26. Qxh7 Rxd5 27. Rxd5 Qxd5 28. g3, as it allows that grip to be broken after 28...c4. This, again, bears out Markos's point that engines don't necessarily favour natural human moves.

Instead, 26. Qg3! (pinning the rook) h5 27. h4 leaves black with the same problems: no plan, and only potential pawn weaknesses on the kingside. Despite the fluctuation in the engine's evaluations between move twenty and move twenty-four, the critical moment was evidently before 20. Rd5, which bears out Markos's second point about engines. In fact, we can go further and state the obvious: engines are not interested in the critical moment. To a machine, every move is just another move.

What is the absolute truth of the position after Karpov's initial offer of the exchange? Only an infinite mind could tell you. But it is true to say white should feel optimistic in the resulting position at move twenty-four, because in practice only white has any hope of doing something productive. And if white has hope of doing something productive, then what use is the engine's verdict of equality?

If we had been black in this game we could use that evaluation to console ourselves, but it's hard to see what good that would do us.

(White won)

Engines sometimes need a human helping hand (and not just to switch them on and off)

Frank Marshall - Jose Raul Capablanca, New York 1909.

White to move

Here, the engine picks 16. Rfd1 as its main candidate move and gives it an evaluation of -0.3. However, cut Stockfish off a bit too soon, before it reaches an adequate depth, and it produces quite a different move.

Here's a game to play: try to match the following four candidate moves, a) 16. Rfc1, b) 16. h4, c) 16. e4 and d) 16. Qxb7, with the following four descriptions: 1) Stockfish's initial suggestion; 2) Marshall's actual move; 3) a further alternative to Marshall's move given in Shereshevsky's Endgame Strategy; 4) the strongest move.

Try it yourself.

If you said a=2, b=1, c=3, and d=4, bravo. However, these days most players with even a passing familiarity with computer chess can identify 16. h4 as an engine move. Indeed, the engine's fondness for pushing the h-pawn has resulted in more and more GMs pushing their h-pawns in situations where previously they would have left them untouched. Here, however, it doesn't make much sense. It's about as sensible as white giving up the light squares would have been in the Karpov game. 16. h4 isn't going to compromise black's kingside to any effect, because black's queenside pawns on the other wing are simply too fast.

Shereshevsky's 16. e4 is similarly ineffective. Both sides can push their respective pawn majorities, but, again, black's three queenside pawns are much faster than white's four kingside pawns, especially with both kings stuck on the kingside. In a pawn race, white loses. Marshall's 16. Rfc1 proved too passive in the game, and the engine's 16. Rfd1 leaves white slightly worse because it doesn't slow black's queenside pawns for very long. Move sixteen is the critical moment. It's now or never.

Which brings us to the strongest move: 16. Qxb7. Knowing how the game went after 16. Rfc1, with the benefit of hindsight we might ask why white doesn't destroy black's queenside majority with 16. Qxb7. Clearly Marshall and subsequent analysts were afraid that after 16...Qxb7 17. Bxb7 Rab8 black would take on b2 and dominate the second rank. But if you force the engine to play 16. Qxb7 and follow that line, its evaluation changes at once and it declares the position equal, owing to 18. Bf3 Rxb2 19. Rfb1 Rfb8 20. Rxb2 Rxb2 and then

After 21. Bd5!!

The result is an equal rook and pawn ending, owing to black's weak back rank. The engine's initial reluctance to play 16. Qxb7 goes to show that it will sometimes prefer meaningless or ineffectual moves over the best moves, and it needs an alert human operator to steer it in the right direction.

We're unlikely to become Grandmasters; we can never be engines

Human players, owing to our different strengths and limitations, as well as our awareness that we're in a battle with another human being, aim to exert control over a game. We're more interested in utility than in objectivity. In other words, we humans are all pragmatists.

The engine is objective.

Steve Kee - Roger Williamson, Wiseman-Hurley Open 2022.

After 10. e5?!

The choice for black is between 10...Nxe5 and 10...Bxe5. According to the engine, they're both good. I played 10...Nxe5 because it both preserved the bishop pair and was the easier of the two to calculate to a stable yet slightly advantageous position for black.

Contrast that with what might happen after 10...Bxe5 11. Qxd8 Rxd8 12. Nxe5 Nxe5 13. Bf4:

I find that unopposed bishop on f4 worrying - wrongly, as the engine reassures me. But I'm not strong enough to convince myself that the line above is worthwhile, given the annoyance the bishop represents after 13...Nd3 14. Bxc7 Rd7 and either 15. Bf4 or 15. Ba5. With the clock ticking, and knowing I'm already exploring the outer boundaries of my abilities, just how much more precious time do I want to spend trying to go further down these lines at the board? After 15. Ba5, take a look at this line, which the engine evaluates as giving black a respectable advantage:

Moves like the consolidating 18...Bd5 may be easy to find once you arrive at move eighteen, but they are not easy to see from move ten, or twelve, or even fourteen. Indeed, a GM might be able to see as far as 18...Bd5 from move ten, be comfortable that white's bishops aren't going to hurt them, and still decide against 10...Bxe5 on the grounds of expediency, as 10...Nxe5 offers greater certainty.

The engine, however, simply does not care. 10...Bxe5 is better, so 10...Bxe5 it plays.

Why do we so often rely solely on the engine?

Getting a machine to do the post-game analysis for us merely makes us aware we're all too human. So why have we all (quite regularly, for many of us) mindlessly let the engine do our job for us and then uncritically reported its findings to anyone who'll listen, especially after a painful defeat? The answer is unflattering. Yes, we're interested in approximating the truth of the game we played. And, obviously, it's easier to let the engine do that alone. But it's also less emotionally taxing to be judged by a machine rather than by another person - or, worse, by our own selves. In this way the engine becomes a means of pre-empting both criticism and self-criticism. And yet without human reflection, criticism and self-criticism, there can be no human self-improvement.

You could ask the engine whether or not that's the case. Just don't expect a reply.